(Pixabay/Gerd Altmann)

Sometime before December 2019, Bishop Paul Tighe, secretary of the Pontifical Council for Culture, and Michael Koch, then the German ambassador to the Holy See, had a series of discussions on the long-term societal and philosophical ramifications of artificial intelligence that led them to jointly sponsor a symposium, "The Challenge of Artificial Intelligence for Human Society and the Idea of the Human Person."

Originally planned for June 4, 2020, the symposium, finally held on Oct. 21, 2021, was the first in-person conference hosted by anyone from the Vatican Curia since the outbreak of COVID-19. Along with the six presenters, two moderators and two chairs, Cardinal Gianfranco Ravasi, council president, and Ambassador Bernhard Kotsch, Koch's successor, hosted more than 100 attendees: many ambassadors, journalists, local faculty and members of the Vatican Curia at the famed Palazzo della Cancelleria.

The topic was not a new interest of Tighe's. In September 2019, his office, along with Cardinal Peter Turkson's Dicastery for Promoting Integral Human Development, hosted a three-day seminar on "The Common Good in the Digital Age" with leaders from the digital industry and from concerned nongovernmental organizations, as well as members of the academy and the Curia. Tighe is a natural at bringing together a wide variety of disparate stakeholders as necessary interlocutors.

The Oct. 21 symposium had three sessions.

The first panel, made up of a scientist, a philosopher and a theologian, was charged to clarify how and to what extent the emergence of AI requires us to rethink what it means to be human.

The second panel covered the prescriptive and normative consequences that AI raises. The panel consisted of a world-renowned human rights lawyer, a social ethicist and the European head of the world's largest technical professional organization, IEEE (Institute of Electrical and Electronics Engineers).

Jens Redmer, principal for new products at Google, moderated the first panel, and Don Bosco Sr. Alessandra Smerilli moderated the second. Smerilli is an economist who, as the interim secretary of the Vatican Dicastery for Promoting Integral Human Development, is one of the highest-ranking women in the Vatican Curia.

The third was a roundtable of the six of us, where the former ambassador, Michael Koch, provoked us with a set of questions about humanity and hope.

Here, I offer seven lessons that I learned from the symposium as one of the presenters.

First, the advances of artificial intelligence are staggeringly more rapid than anyone ever anticipated. If climate change isn't happening quickly enough for you, then turn to artificial intelligence to give you a fright.

Christof Koch, chief scientist of the MindScope Program at the Allen Institute for Brain Science in Seattle, was the first panelist to speak and brought us all to an appreciation of the urgency of the moment with these compelling words:

A future where the boundary between carbon-evolved and silicon-designed life becomes ever more porous is approaching at warp speed. Supervised and unsupervised deep machine learning allows computers to listen and answer appropriately. Computers and their siren voices, linguistic skills, knowledge and social graces will become indistinguishable from those of high-functioning humans — flawless, creative (e.g., move 37 in the second game of the Go match of AlphaGo against Lee Sedol), and ubiquitous in homes, industry, entertainment and, inevitably, warcraft.

Tech's demiurges will fashion digital creatures, first virtual but eventually with humanoid, quadruped, insectoid or avian bodies. Assuming no planetary nightfall, these trends, accelerated by the pace of progress in machine learning, databases and hardware (e.g., quantum computers, bipedal robots), are all but irreversible and will profoundly affect humanity's future, including whether we have any.

Christof Koch's opening was unsettling.

Second, the discourse on artificial intelligence is occurring within very different language games. Lawyers speak legalese; theologians, theology; technicians have tech talk; and social scientists their own ways of reporting. Each field has not only its own way of conceptualizing but also different ways of assessing and judging.

Scientists' concerns are very different from the market forces that drive the inevitability of AI, and these in turn differ from the regulatory controls articulated by international law experts or the normative inquiries of engineers at IEEE. We talk past one another, not knowing what the other "experts" are saying.

Still, no single field of inquiry can adequately supply the array of concepts necessary to study the phenomenon of AI sufficiently, let alone subdue it. Christof Koch is one of those exciting leading scientists able to be at the forefront of brain investigations, with a predilection for Aristotelian philosophy and a journalist's capability to write regularly for Scientific American.

But neither he nor anyone else could command the topic, such is its reach. Instead, what we learned, and what Tighe had designed, was that the symposium occasioned the necessity to bridge these language games by interfacing them with significant human actors. Moreover, each of us, like the philosopher Matthias Lutz-Bachmann, was in fact a polyglot of such language games: Originally a Kantian with deep Aristotelian bias, he found facility in human rights discourse.

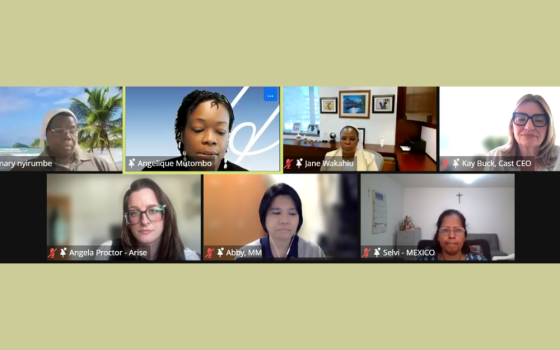

A symposium on "The Challenge of Artificial Intelligence for Human Society and the Idea of the Human Person," organized by the Vatican's Pontifical Council for Culture and the German Embassy to the Holy See, is held Oct. 21 at the Palazzo della Cancelleria in Rome. (Courtesy of the Pontifical Council for Culture)

Third, the human being stood as both a contradiction and as a mirror to AI. In our first panel, Koch argued convincingly that "these computers, no matter how functionally proficient, do not have high intrinsic causal powers and therefore cannot have subjective experiences."

Noting that machines cannot suffer, he stated, "Even an accurate computer simulation of a human brain would not be conscious, although the machine would convincingly fake the appearance of being conscious."

He concluded: "Machines are about doing, not about being. Indeed, in principle, machines can do everything humans can but be nothing."

To make his point all the more clear, in response to a question, he commented that AI is as close to being a human being as a washing machine is!

Lutz-Bachmann followed up with an eloquent dissent to "the necessity for a so-called 'post-humanist' adaptation of the understanding of the 'human person' to smart systems." Like Koch, he insisted that humans would not and should not be subsumed under a concept of agency in the future that could include AI and humanity as equal partners.

While Koch saw suffering as that which denied the consciousness of AI, Lutz-Bachmann established the "intersubjectivity" of the human community as a quality that AI could never appropriate.

Still, I proposed that our human self-understanding should inform how we design AI. Wanting to replace the reductive idea of the human as rational or the dystopian claim of the human as a new creator, I proposed a capacious understanding of the human as vulnerable. By this I mean not wounded or in need, but something more like the philosopher Judith Butler's claim that vulnerability is essentially what most qualifies me as being bound to and among others; it is what allows me to be ethically responsive or answerable to the other.

This is a notion of vulnerability that we find in our God who in Jesus Christ is revealed as vulnerable for us and even answerable to us. Like Jesus, who cried out to God on the cross, we pray, believing inevitably that God is answerable.

These notions of vulnerability and answerability ought to inform the end of our design of AI.

Fourth, while the U.S. has not developed any clear regulatory guidelines regarding AI, Michael O'Flaherty, director of the European Union Agency for Fundamental Rights, highlighted the importance of putting the human at the center of AI development and use. Showing that human rights provide the necessary framework to articulate such regulations, he used the language of risk to make the designers of AI more transparent and accountable.

In my estimation, his risk-based regulatory approach provided a refreshing assurance that despite the hands-off approach of the U.S., at least the European Union knew that designers of AI cannot work without being held as answerable.

Fifth, not all norm-makers are equal. Google has committed to a do-no-harm set of guidelines in developing its AI research policies. Still, leaving the private, profit-driven sector in the U.S. fundamentally unregulated stood in contrast not only with O'Flaherty's work in the European Union but also with Clara Neppel's work with the IEEE.

The not-for-profit IEEE is the world's largest technical professional organization dedicated to advancing technology for the benefit of humanity and stands as an excellent resource for developing ethical guidelines for holding designers and researchers responsible.

Sixth, the present trajectory of AI is, then, a cause of hope and fear. The promise of a vulnerable and answerable AI emerges as greater regulatory standards are articulated and enforced on AI's designers, but as Smerilli noted, there are very few voices advocating to share the promise of AI beyond the industrialized world.

Though regulators of AI designers might inhibit the risk of AI eventually overpowering us, they do not effectively address the issue that the industry needs to be better aimed at equitable distribution of AI resources and outcomes. Indeed, the profit-driven world of technology remains on course to further empower and enhance those who can afford it.

Moreover, when Michael Koch asked us during the roundtable whether we were hope-filled about AI, my other panelists asserted that they were, in part because of the regulatory work of the EU and IEEE. In fact, social ethicist Dominican Sr. Helen Alford underlined the insight that AI could not force us to act.

I, on the other hand, wondered aloud about whether our confidence in regulations was overstated. As a man approaching my 70s, I wondered how welcoming the experience of AI would be to the elderly. Already, most of us fear answering the phone, since the call is more and more frequently from AI.

I agreed that I might not be forced to act, but I certainly could be manipulated and confused by the world of AI. Were we adequately aware of how intimidating the world of AI was likely to become for those in much more precarious situations than ourselves?

Michael Koch asked us a second question about hope, about whether the line between the human and AI would hold. Here again, I was a party of one. Where the others insisted that the difference between AI and humanity was clear (AI could not suffer, could not be intersubjective, needed to be regulated), I suggested that I could foresee, 15 years from now, AI taking the role of the resident director in one of our dorms here at Boston College. Were we to ever terminate that AI from providing its service, arguing that it was no more than unplugging a washer, I imagined my undergraduates protesting our heartlessness as we terminated their resident director. I also dreaded the predictable calls from their parents!

Admittedly, AI might not be a human person, but that doesn't mean we will not act as though it were.

Here was the seventh lesson to emerge: Like the work of regulations, the human inclination to line-drawing is conceptually very reassuring, but humanity is not only drawn to concepts. While we might be getting our categories right about AI, we might need to anticipate not only how things should be but also how they will be, when, after all, humans are as easily inclined to cross a boundary as they are reassured by having one.

Indeed, through these final discussions, I was reminded of the opening words of Reinhold Niebuhr in The Nature and Destiny of Man: "Man has always been his most vexing problem. How shall he think of himself? Every affirmation he makes about his stature, virtue, or place in the cosmos becomes involved in contradictions when fully analyzed."